Interactive Keras Captioning

Interactive multimedia captioning with Keras (Theano and TensorFlow backends). Given an input image or video, the system generates a description of its content.

Interactive captioning

Interactive-predictive pattern recognition is a collaborative human-machine framework for obtaining high-quality predictions while minimizing the human effort spent during the process.

It consists of an iterative prediction-correction process: each time the user corrects a hypothesis, the system reacts by offering an alternative hypothesis that takes the user's feedback into account.
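The prediction-correction loop described above can be sketched as follows. This is a minimal illustration, not the toolkit's implementation: it assumes a prefix-based protocol in which the user validates the hypothesis up to the first wrong word and supplies the right one, and the names `interactive_session`, `toy_predict`, and the candidate captions are hypothetical stand-ins for a real captioning model and a real user.

```python
from typing import Callable, List, Tuple


def interactive_session(
    predict: Callable[[List[str]], List[str]],
    reference: List[str],
    max_iterations: int = 50,
) -> Tuple[List[str], int]:
    """Simulate a prefix-based prediction-correction loop.

    `predict(prefix)` is assumed to return a full hypothesis that starts
    with `prefix`. The user is simulated with a reference caption.
    Returns (final hypothesis, number of user corrections).
    """
    prefix: List[str] = []
    corrections = 0
    hypothesis = predict(prefix)
    for _ in range(max_iterations):
        if hypothesis == reference:
            break  # the user accepts the hypothesis
        # The simulated user locates the first wrong position.
        pos = 0
        while (pos < len(hypothesis) and pos < len(reference)
               and hypothesis[pos] == reference[pos]):
            pos += 1
        corrections += 1
        if pos == len(reference):
            # The hypothesis is too long: the user truncates it.
            hypothesis = list(reference)
            break
        # The validated prefix now includes the corrected word.
        prefix = reference[:pos + 1]
        hypothesis = predict(prefix)
    return hypothesis, corrections


def toy_predict(prefix: List[str]) -> List[str]:
    """Hypothetical model: completes the prefix from a fixed candidate list."""
    candidates = [
        ["a", "dog", "runs", "on", "the", "grass"],
        ["a", "man", "rides", "a", "horse"],
        ["a", "man", "rides", "a", "bike"],
    ]
    for candidate in candidates:
        if candidate[:len(prefix)] == prefix:
            return list(candidate)
    return list(prefix)  # nothing to add beyond the validated prefix


reference = ["a", "man", "rides", "a", "bike"]
final, effort = interactive_session(toy_predict, reference)
print(" ".join(final), "| corrections:", effort)
```

In this toy run the user corrects two words ("man" and "bike") instead of typing the whole five-word caption, which is the kind of effort reduction the framework aims for.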

For further reading about this framework, please refer to "Interactive Neural Machine Translation", "Online Learning for Effort Reduction in Interactive Neural Machine Translation", and "Interactive-predictive neural multimodal systems".

Features

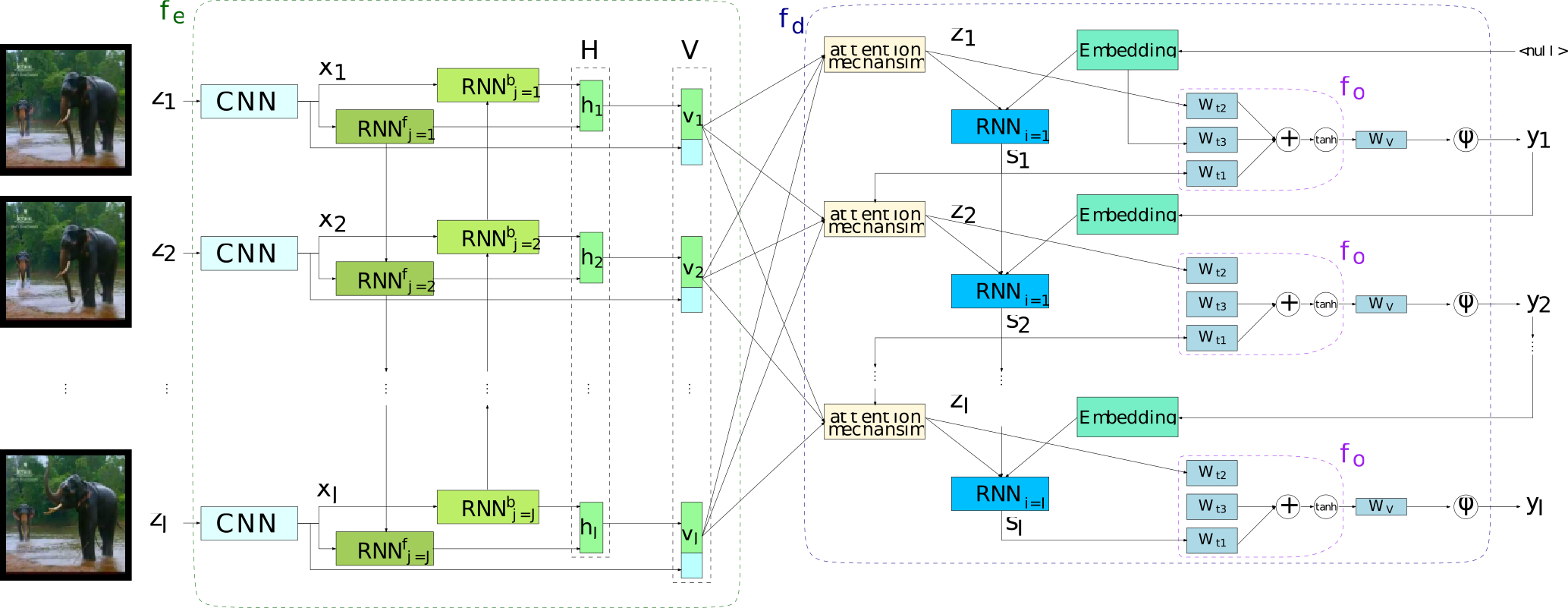

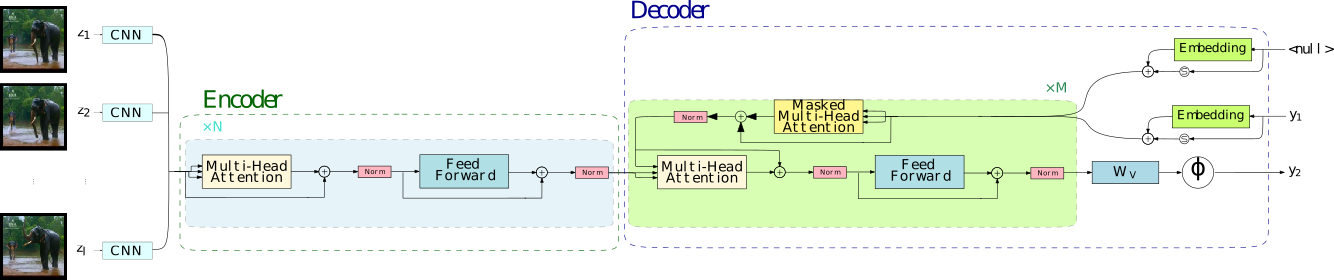

- Attention-based RNN and Transformer models.

- Support for GRU/LSTM networks:

  - Regular GRU/LSTM units.

  - Conditional GRU/LSTM units in the decoder.

  - Multilayered residual GRU/LSTM networks.

- Attention model over the input sequence of annotations:

  - Supports Bahdanau (Add) and Luong (Dot) attention mechanisms.

  - Also supports doubly stochastic attention.

- Peeked decoder: The previously generated word is an input of the current timestep.

- Beam search decoding:

  - Featuring length and source coverage normalization.

- Ensemble decoding.

- Caption scoring.

- N-best list generation (as a byproduct of the beam search process).

- Use of pretrained (GloVe or Word2Vec) word embedding vectors.

- MLPs for initializing the RNN hidden and memory states.

- Spearmint wrapper for hyperparameter optimization.

- Client-server architecture for web demos.
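To make the beam search, length normalization, and N-best items above more concrete, here is a minimal, generic sketch in plain Python. It is not the toolkit's decoder: the function `beam_search`, its parameters (`beam_size`, `alpha`, `eos`), and the toy model `toy_next_log_probs` are all assumptions made for illustration, and the normalization shown (dividing the summed log-probability by length raised to `alpha`) is only one common scheme. Source coverage normalization is not covered here.

```python
import math
from typing import Callable, Dict, List, Tuple

Hypothesis = Tuple[List[str], float]  # (tokens, score)


def beam_search(
    next_log_probs: Callable[[List[str]], Dict[str, float]],
    beam_size: int = 3,
    max_len: int = 10,
    eos: str = "</s>",
    alpha: float = 1.0,
) -> List[Hypothesis]:
    """Return an N-best list sorted by length-normalized log-probability.

    `next_log_probs(tokens)` maps a partial caption to the log-probability
    of each possible next word. `alpha=0` disables length normalization.
    """
    beams: List[Hypothesis] = [([], 0.0)]
    finished: List[Hypothesis] = []
    for _ in range(max_len):
        # Expand every live hypothesis by every possible next word.
        candidates = [
            (tokens + [word], score + logp)
            for tokens, score in beams
            for word, logp in next_log_probs(tokens).items()
        ]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_size]:
            if tokens[-1] == eos:
                finished.append((tokens, score))
            else:
                beams.append((tokens, score))
        if not beams:
            break
    finished.extend(beams)  # keep hypotheses cut off at max_len
    normalized = [
        (tokens, score / (max(len(tokens), 1) ** alpha))
        for tokens, score in finished
    ]
    return sorted(normalized, key=lambda c: c[1], reverse=True)


def toy_next_log_probs(tokens: List[str]) -> Dict[str, float]:
    """Hypothetical stand-in for a captioning model's output distribution."""
    table = {
        (): {"a": math.log(0.6), "dog": math.log(0.4)},
        ("a",): {"dog": math.log(0.6), "cat": math.log(0.4)},
    }
    # Any other prefix ends the caption with probability 1.
    return table.get(tuple(tokens), {"</s>": 0.0})


for tokens, score in beam_search(toy_next_log_probs, beam_size=3, alpha=1.0):
    print(f"{score:.3f}", " ".join(tokens))
```

With `alpha=1.0` the top entry of the N-best list is `a dog </s>`, while with `alpha=0.0` (no normalization) the shorter `dog </s>` ranks first. This illustrates why unnormalized beam search tends to favor short captions.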

Guide

- Installation

- Usage

- Configuration options

- Naming and experiment setup

- Options for input data type ‘*-features’

- Options for input data type ‘raw-image’

- Output data

- Input/output mappings

- Evaluation

- Decoding

- Search normalization

- Sampling

- Word representation

- Text representation

- Output text

- Optimization

- Learning rate schedule

- Training options

- Early stop

- Model main hyperparameters

- AttentionRNNEncoderDecoder model

- Transformer model

- Regularizers

- Tensorboard

- Storage and plotting

- Modules

- Contact